Prior to the election, I announced a test of whether Dominion machines correlate with the 2022 results. The test takes the RealClearPolitics (RCP) polling averages from before the election, compares them to the actual results, and uses the percentage of each state's potential voters covered by Dominion machines as a predictor of the difference.

In 2022, the Dominion effect was 4.06 percentage points, with a one-sided p-value just under 10%.

Background

Ten days ago I wrote about the statistical tests I would run regarding Dominion election machines.

I warned that all eyes were on the Arizona governor's race, where the Republican was (slightly) ahead, the Democratic candidate was in charge of her own certification process, and Maricopa County uses Dominion machines, which would have been targeted for cancellation if the Republican won.

Unfortunately, the machine processes failed in some areas of Maricopa County at some points in time. I do not know which type of machine (printer vs. tabulator) or which brand caused the issue. I read various “fact checks” that blame the printers rather than the tabulators, but the official statement came from a video Maricopa County itself posted on Twitter, which said “20% of the locations have an issue with the tabulators”. Watch the video for yourself, if it is still available. (Here is an article about the person on the right in the video.)

So… where does that leave us?

Unfortunately, it means that a large number of individuals in our society will not trust the outcome of this Arizona election, independently of any statistics I am about to produce.

Having said that, here are some stats to consider.

Stats

Table 1 shows the number of elections (for governor or for senate) that were included in the study. Note that the Oregon governor polls were a tie going into the election, so I excluded Oregon from this table to make it easier to read.

Table 1: Overall View

| Category | Total | Republicans Predicted to Win | Republicans Actually Won | Democrats Predicted to Win | Democrats Actually Won | Change (Races Flipped to Democrats) |
|---|---|---|---|---|---|---|
| Dominion Covers Less than 15% of Potential Voters | 10 | 6 | 6 | 4 | 4 | 0 |
| Dominion Covers More than 15% of Potential Voters | 13 | 6 | 2 | 7 | 11 | 4 |
| Total | 23 | 12 | 8 | 11 | 15 | 4 |

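Table 2 shows the data used in the statistical analysis. The first two numeric columns give the Democratic margin over the Republican in percentage points (negative when the Republican led). The first is the RCP polling average of 11-5-22, and the second is the actual result as reported by RCP on 11-16-22. The Difference column is the actual margin minus the polled margin, so a positive value means the result moved toward the Democrat relative to the polls.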

Table 2: Data for Statistical Test

| State | Election | RCP Avg 11-5-22 Dem. Advantage (pts) | RCP Actual 11-16-22 Dem. Advantage (pts) | Difference, Actual minus Poll (Y Variable) | Dominion Percent (X Variable) |
|---|---|---|---|---|---|
| Oklahoma | Governor | -1 | -13.6 | -12.6 | 0% |
| Oregon | Governor | 0 | 3.4 | 3.4 | 0% |
| Texas | Governor | -9.2 | -11 | -1.8 | 0% |
| Florida | Governor | -11.5 | -19.4 | -7.9 | 8% |
| Minnesota | Governor | 4.3 | 7.7 | 3.4 | 14% |
| Pennsylvania | Governor | 10.7 | 14.4 | 3.7 | 19% |
| Wisconsin | Governor | -0.2 | 3.4 | 3.6 | 31% |
| Michigan | Governor | 4.4 | 10.5 | 6.1 | 54% |
| New York | Governor | 6.2 | 5.7 | -0.5 | 55% |
| Arizona | Governor | -1.8 | 0.6 | 2.4 | 60% |
| Colorado | Governor | 11.7 | 19.3 | 7.6 | 92% |
| Nevada | Governor | -1.8 | -1.3 | 0.5 | 98% |
| New Mexico | Governor | 4 | 6.4 | 2.4 | 100% |
| Connecticut | Senate | 11 | 15.2 | 4.2 | 0% |
| New Hampshire | Senate | 0.7 | 9.1 | 8.4 | 0% |
| North Carolina | Senate | -5.2 | -3.5 | 1.7 | 0% |
| Washington | Senate | 3 | 14.8 | 11.8 | 1% |
| Florida | Senate | -7.5 | -16.4 | -8.9 | 8% |
| Ohio | Senate | -6.7 | -6.5 | 0.2 | 13% |
| Pennsylvania | Senate | -0.1 | 4.5 | 4.6 | 19% |
| Wisconsin | Senate | -2.8 | -1 | 1.8 | 31% |
| Arizona | Senate | 1 | 4.9 | 3.9 | 60% |
| Colorado | Senate | 5.3 | 14.6 | 9.3 | 92% |
| Nevada | Senate | -2.4 | 0.9 | 3.3 | 98% |

Table 3 shows the output of a least squares regression of the Difference (Y) on the Dominion percentage (X) from Table 2.

The first field, “intercept”, is how far off the polls were from the actual results after adjusting for the Dominion effect. The value is 0.66, meaning that in a state with no Dominion machines the electorate appeared to vote 0.66 points more Democratic than the pre-election polls. The p-value is 68%, which is not statistically significant. In other words, once the Dominion term is accounted for, the polls were on average not far off from the election results.

The next field is the number of points the model estimates the margin moved, based on how much of the state was covered by Dominion machines. Compared with a state that has no Dominion coverage, a state 100% covered by Dominion is expected to move 4.06 percentage points further toward Democrats from pre-election poll to actual result. For example, if the pre-election polls were a tie, 50/50, going into the election, the Dominion term alone would move a fully Dominion state to 52.03/47.97 (52.36/47.64 once the 0.66-point intercept is included).
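The arithmetic above can be made explicit with a small Python sketch. It assumes a two-party race, so the margin splits evenly around 50% and third parties are ignored. The two constants are the Table 3 estimates. The function names `predicted_margin` and `two_party_shares` are hypothetical and were chosen for illustration.

```python
# Reported estimates from Table 3, in percentage points of Dem-minus-Rep margin.
INTERCEPT = 0.66
DOMINION_COEF = 4.06


def predicted_margin(poll_margin, dominion_fraction, include_intercept=True):
    """Poll margin plus the modeled poll-to-result shift (hypothetical helper)."""
    shift = DOMINION_COEF * dominion_fraction
    if include_intercept:
        shift += INTERCEPT
    return poll_margin + shift


def two_party_shares(margin):
    """Split a Dem-minus-Rep margin into (Dem %, Rep %), assuming only two parties."""
    return 50 + margin / 2, 50 - margin / 2


# Tied poll, state fully covered by Dominion.
dem, rep = two_party_shares(predicted_margin(0.0, 1.0, include_intercept=False))
print(f"Dominion term only: {dem:.2f}/{rep:.2f}")
dem, rep = two_party_shares(predicted_margin(0.0, 1.0))
print(f"With intercept:     {dem:.2f}/{rep:.2f}")
```

With a tied poll and full Dominion coverage, this gives 52.03/47.97 from the Dominion term alone and 52.36/47.64 with the intercept included.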

A generic least squares regression reports a two-tailed t-test, which here produces a p-value of 20%. However, when I announced the test 10 days ago, I pre-announced that we were testing for a positive coefficient, so the correct test is one-sided. The one-sided p-value is 9.99%, approximately 10%. Given the test I announced prior to the election, this means that if Dominion machines had no real effect, the probability of obtaining a coefficient of 4.06 or higher would be about 10%. In other words, there was roughly a 1 in 10 chance of getting a Dominion coefficient this high or higher by chance alone.
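The step from 20% to about 10% works because the t distribution is symmetric. When the estimate falls in the pre-announced direction, only one of the two tails counts. Below is a minimal sketch of that conversion; the function name `one_sided_p` is hypothetical. It applies to any symmetric test statistic (t or z).

```python
def one_sided_p(two_sided_p, coefficient, expected_sign=1):
    """Convert a two-sided p-value from a symmetric test (t or z) into a
    one-sided p-value for the pre-announced direction of the effect."""
    if coefficient * expected_sign > 0:
        # Estimate lies in the predicted direction: only one tail counts.
        return two_sided_p / 2
    # Estimate lies in the opposite direction: it is weak evidence for the
    # announced hypothesis, so the one-sided p-value is large.
    return 1 - two_sided_p / 2


print(one_sided_p(0.20, 4.06))   # pre-announced positive effect, positive estimate
print(one_sided_p(0.20, -4.06))  # same p-value, but the estimate has the wrong sign
```

For example, `one_sided_p(0.20, 4.06)` returns 0.10, matching the halving described here, and `one_sided_p(0.32, 3.44)` returns 0.16. If the coefficient had come out negative, the one-sided p-value would have been close to 1, not small.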

Table 3: Results

| Coefficient | Value | P-Value |
|---|---|---|
| Intercept (how far off the polls were, after adjusting for the Dominion effect) | 0.66 | 68% (two-sided) |
| Dominion Coefficient (percentage-point change based on what proportion of voters are covered by Dominion) | 4.06 | 9.99% (one-sided) |
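For readers who want to check Table 3 without the spreadsheet, the sketch below refits the simple regression from the rounded values in Table 2, using only Python's standard library. To avoid a SciPy dependency, it computes the Student's t tail probability by numerically integrating the t density with Simpson's rule. That is my choice; the spreadsheet may compute it differently. The names `DATA` and `t_upper_tail` are illustrative.

```python
import math

# Table 2: (race, Difference in points = actual minus poll margin, Dominion fraction)
DATA = [
    ("OK Gov", -12.6, 0.00), ("OR Gov", 3.4, 0.00), ("TX Gov", -1.8, 0.00),
    ("FL Gov", -7.9, 0.08), ("MN Gov", 3.4, 0.14), ("PA Gov", 3.7, 0.19),
    ("WI Gov", 3.6, 0.31), ("MI Gov", 6.1, 0.54), ("NY Gov", -0.5, 0.55),
    ("AZ Gov", 2.4, 0.60), ("CO Gov", 7.6, 0.92), ("NV Gov", 0.5, 0.98),
    ("NM Gov", 2.4, 1.00), ("CT Sen", 4.2, 0.00), ("NH Sen", 8.4, 0.00),
    ("NC Sen", 1.7, 0.00), ("WA Sen", 11.8, 0.01), ("FL Sen", -8.9, 0.08),
    ("OH Sen", 0.2, 0.13), ("PA Sen", 4.6, 0.19), ("WI Sen", 1.8, 0.31),
    ("AZ Sen", 3.9, 0.60), ("CO Sen", 9.3, 0.92), ("NV Sen", 3.3, 0.98),
]


def t_upper_tail(t, df, steps=2000):
    """P(T > t) for Student's t with df degrees of freedom.

    Integrates the t density from 0 to |t| with Simpson's rule (steps must be even).
    """
    const = math.exp(math.lgamma((df + 1) / 2) - math.lgamma(df / 2)) / math.sqrt(df * math.pi)

    def density(u):
        return const * (1 + u * u / df) ** (-(df + 1) / 2)

    a = abs(t)
    h = a / steps
    total = density(0.0) + density(a)
    total += sum((4 if i % 2 else 2) * density(i * h) for i in range(1, steps))
    area = total * h / 3  # P(0 < T < |t|)
    return 0.5 - area if t >= 0 else 0.5 + area


xs = [x for _, _, x in DATA]
ys = [y for _, y, _ in DATA]
n = len(DATA)
mean_x, mean_y = sum(xs) / n, sum(ys) / n
sxx = sum((x - mean_x) ** 2 for x in xs)
sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))

slope = sxy / sxx                    # Dominion coefficient (points per 100% coverage)
intercept = mean_y - slope * mean_x  # poll miss at 0% Dominion
sse = sum((y - intercept - slope * x) ** 2 for x, y in zip(xs, ys))
se_slope = math.sqrt(sse / (n - 2) / sxx)
t_stat = slope / se_slope

p_one_sided = t_upper_tail(t_stat, n - 2)            # H1: slope > 0 (pre-announced)
p_two_sided = 2 * t_upper_tail(abs(t_stat), n - 2)  # generic regression output

print(f"intercept={intercept:.2f} slope={slope:.2f} t={t_stat:.3f}")
print(f"one-sided p={p_one_sided:.4f} two-sided p={p_two_sided:.4f}")
```

With the rounded percentages from Table 2 (24 races, 22 degrees of freedom), this gives:

- an intercept of about 0.67,
- a Dominion coefficient of about 4.05,
- a one-sided p-value of about 0.10,
- a two-sided p-value of about 0.20.

The small gaps from Table 3 presumably come from the spreadsheet using unrounded Dominion percentages.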

Here is the spreadsheet for you to see the math.

What does this mean?

It could mean nothing. Statistics involve randomness, and a result like this can occur by chance.

However, the article you are currently reading and my article from 2020 show:

- There are correlations from 2022 which correspond to people’s suspicions of fraud.

- There are correlations from 2020 which correspond to people’s suspicions of fraud.

Finding correlations in two elections in a row should make the general public understand that something may be going wrong, and it remains appropriate to push for transparency.

Polls show that about one third of Americans do not trust the results of the 2020 election. With more than 300,000,000 Americans, that is about 100,000,000 people.

For our society to stop having 100,000,000 people who feel their election choices are not being fairly counted, society must do one of three things:

- Develop a process to make the overwhelming majority feel that machine voting is fair; this is urgently needed for Dominion, for example;

or

- Stop using machine voting;

or

- Stop using specific machine brands that have not developed a process for people to verify and feel comfortable that the machines are fair.

Stats for Nerds

Okay, above I showed the outcome of a pre-announced test.

If you are interested, here are a few other things to consider; some strengthen the theory and some weaken it.

Question 1: From a data perspective, what really happened?

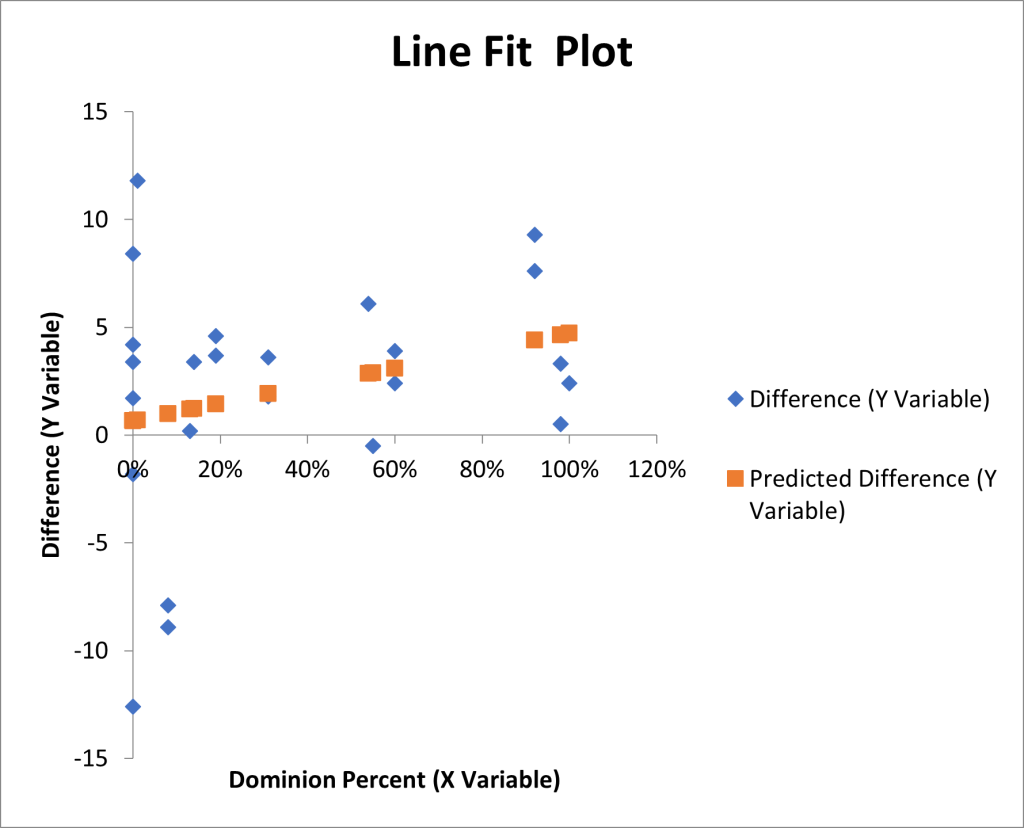

Answer: Florida, Oklahoma, and Texas, which are not heavy Dominion states, broke hard for Republicans, while most of the other places broke for Democrats. Some states that were not heavily Dominion also broke hard for Democrats, such as New Hampshire and Washington. If the theory about Dominion machines were true, we would not have expected New Hampshire and Washington to break this way. The chart above shows the actual results alongside the modeled output from the regression above. Notice the wide range of values where the Dominion percentage is close to 0. This is not a sign of a particularly good model.
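To put a rough number on that spread, the sketch below takes the Table 2 races with Dominion coverage of 1% or less. It subtracts the fitted line from Table 3 (0.66 + 4.06 × Dominion fraction) from each race's Difference. The 1% cutoff and the variable names are my own choices for illustration.

```python
import statistics

# Reported fit from Table 3: shift = 0.66 + 4.06 * dominion_fraction
INTERCEPT, DOMINION_COEF = 0.66, 4.06

# Table 2 races with Dominion coverage of 1% or less (cutoff chosen for illustration):
# (race, Difference in points, Dominion fraction)
NEAR_ZERO = [
    ("Oklahoma Gov", -12.6, 0.00),
    ("Oregon Gov", 3.4, 0.00),
    ("Texas Gov", -1.8, 0.00),
    ("Connecticut Sen", 4.2, 0.00),
    ("New Hampshire Sen", 8.4, 0.00),
    ("North Carolina Sen", 1.7, 0.00),
    ("Washington Sen", 11.8, 0.01),
]

# Residual = actual Difference minus the fitted shift for that race.
residuals = {race: y - (INTERCEPT + DOMINION_COEF * x) for race, y, x in NEAR_ZERO}
values = list(residuals.values())
spread = max(values) - min(values)
residual_sd = statistics.stdev(values)

for race, r in sorted(residuals.items(), key=lambda kv: kv[1]):
    print(f"{race:20s} {r:+7.2f}")
print(f"spread of residuals: {spread:.2f} points; sample sd: {residual_sd:.2f}")
```

The residuals run from about -13.3 points (Oklahoma governor) to about +11.1 points (Washington senate). That is a spread of roughly 24 points among races with essentially the same Dominion coverage.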

Question 2: Did the polls miss how the Hispanic vote would break?

Some people theorize that the polls underestimated how far the Hispanic vote would swing Republican. That would explain why Florida, Texas, and to some extent New York didn't follow the rest of the nation, which went more Democratic than some anticipated.

Answer: If you add the Hispanic vote to the regression, it does show the Hispanic vote swinging toward Republicans, and the Dominion effect strengthens to 7.54, with a one-sided p-value of 1.7%. Suppose this theory were true: Hispanics broke for Republicans, but Dominion broke for Democrats. It would then help explain why Nevada, Arizona, and New Mexico still ended up mostly Democratic while Florida and Texas went hard for Republicans.

However, I am not in favor of adding variables to a model of this type with so few observations.

Attached is a spreadsheet a friend of mine made.

Question 3: Did the Real Clear Politics polls change after you took the data over the weekend prior to the election?

Yes. When I went back and looked at them after the election, I did not feel I could use what the website showed: in some states it had stopped showing the “RCP Average” and showed only individual polls.

Question 4: Shouldn’t the real test be the actual outcome of the election, meaning win or lose, not whether Florida runs up the scoreboard?

Answer: I think that is a good way to look at how this should be tested. To do this, I ran a logistic (logit) regression on the outcomes, win or lose. It generated a warning, which indicates the model may not be a good fit. The Dominion effect was 3.44, with a two-sided p-value of 32%. Because the coefficient is positive, that corresponds to a one-sided p-value of 16%. Again, the warnings suggest there were not enough data points for this type of regression to be a good fit. Attached are the data and the R code:
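The exact specification in the R code is not stated here, so the sketch below is only one plausible version, written in Python rather than R. In it, the outcome is 1 if the Democrat won (actual margin above 0 in Table 2), and the predictors are the RCP poll margin and the Dominion fraction. Several pieces are my assumptions:

- that specification,
- the helper names `solve`, `loglik`, and `fit_logit`,
- the Newton-Raphson fitting loop with step-halving.

Because of this, the coefficient it prints need not match the 3.44 above. Like R's `summary()` of a binomial `glm`, it reports a Wald z-test.

```python
import math
from statistics import NormalDist

# Table 2: (RCP poll margin, Dominion fraction, actual margin), in points.
# ASSUMED specification: outcome = 1 if the Democrat won (actual margin > 0).
RACES = [
    (-1.0, 0.00, -13.6), (0.0, 0.00, 3.4), (-9.2, 0.00, -11.0), (-11.5, 0.08, -19.4),
    (4.3, 0.14, 7.7), (10.7, 0.19, 14.4), (-0.2, 0.31, 3.4), (4.4, 0.54, 10.5),
    (6.2, 0.55, 5.7), (-1.8, 0.60, 0.6), (11.7, 0.92, 19.3), (-1.8, 0.98, -1.3),
    (4.0, 1.00, 6.4), (11.0, 0.00, 15.2), (0.7, 0.00, 9.1), (-5.2, 0.00, -3.5),
    (3.0, 0.01, 14.8), (-7.5, 0.08, -16.4), (-6.7, 0.13, -6.5), (-0.1, 0.19, 4.5),
    (-2.8, 0.31, -1.0), (1.0, 0.60, 4.9), (5.3, 0.92, 14.6), (-2.4, 0.98, 0.9),
]


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def sigmoid(eta):
    """Numerically stable logistic function."""
    if eta >= 0:
        return 1.0 / (1.0 + math.exp(-eta))
    return math.exp(eta) / (1.0 + math.exp(eta))


def loglik(beta, X, y):
    """Bernoulli log-likelihood, using log(1 + e^eta) computed stably."""
    total = 0.0
    for xi, yi in zip(X, y):
        eta = sum(b * v for b, v in zip(beta, xi))
        total += yi * eta - (max(eta, 0.0) + math.log1p(math.exp(-abs(eta))))
    return total


def fit_logit(X, y, max_iter=50, tol=1e-10):
    """Maximum-likelihood logistic regression via Newton-Raphson with step-halving."""
    k = len(X[0])
    beta = [0.0] * k
    for _ in range(max_iter):
        grad = [0.0] * k
        info = [[0.0] * k for _ in range(k)]  # Fisher information X'WX
        for xi, yi in zip(X, y):
            p = sigmoid(sum(b * v for b, v in zip(beta, xi)))
            for j in range(k):
                grad[j] += (yi - p) * xi[j]
                for c in range(k):
                    info[j][c] += p * (1.0 - p) * xi[j] * xi[c]
        step = solve(info, grad)
        # Halve the Newton step until the log-likelihood does not decrease.
        scale, ll_old = 1.0, loglik(beta, X, y)
        while scale > 1e-8 and loglik(
                [b + scale * s for b, s in zip(beta, step)], X, y) < ll_old:
            scale /= 2
        step = [scale * s for s in step]
        beta = [b + s for b, s in zip(beta, step)]
        if max(abs(s) for s in step) < tol:
            return beta, info
    raise RuntimeError("did not converge; the data may be (quasi-)separated")


X = [(1.0, poll, dom) for poll, dom, _ in RACES]
y = [1.0 if actual > 0 else 0.0 for _, _, actual in RACES]
beta, info = fit_logit(X, y)
# Variance of the Dominion coefficient = diagonal entry of the inverse information.
se_dom = math.sqrt(solve(info, [0.0, 0.0, 1.0])[2])
z = beta[2] / se_dom
p_two = 2 * (1 - NormalDist().cdf(abs(z)))
p_one = 1 - NormalDist().cdf(z)  # H1: Dominion coefficient > 0
print(f"Dominion coef={beta[2]:.2f} z={z:.2f} two-sided p={p_two:.3f} one-sided p={p_one:.3f}")
```

With only 24 races and a handful of upsets, the estimates are fragile. If the outcomes were perfectly separated by the predictors, no finite estimate would exist and the loop would typically fail to converge. R typically flags that situation with warnings such as “fitted probabilities numerically 0 or 1 occurred”.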

Question 5: Were there any races that went against the Dominion theory?

Answer: Yes. Nevada is a heavy Dominion state, yet the governor's race went to the Republican. The Republican winning the close Wisconsin Senate race is also interesting.

Question 6: What do you really think about this overall test?

Answer: It was interesting to make a hypothesis prior to the election and test it. However, I don’t think there really are enough data points in this test to get a good fit. I think the work I did in 2020 was much more comprehensive because it had much more data. I do note that both tests showed a correlation. Two tests in a row should strengthen the need for transparency. When I first heard the theory about Dominion, it sounded crazy to me, but the correlations are there.